The Not Equal Bits Leak Out workshop, which was held in the Computational Foundry at Swansea University on the 15th November looked at how we can navigate the potential risks and opportunities when it comes to algorithm service delivery.

Ian Johnson, Doctoral Trainee at Newcastle University shared his thoughts.

Scott Jenson a UX researcher at Google kicked off the day helping us understand some of the issues and cases around algorithmic decision-making, the internet of things and potential future projects. We then heard from Raymond Bond from Ulster University who shared with us the times when algorithms go wrong.

After a short presentation from Clara Crivellaro from Open Lab at Newcastle University, we broke into workshop groups.

My group selected a scenario that focused our attention on the potential biases in algorithm-driven processes to identify children at risk. This explore stage really helped my group to see the bigger picture but also to spotlight specific parts of the system for discussion and debate.

Our discussion quickly moved toward the training data, and specifically the establishment of a ground truth. What was the original dataset? When was it collected, and how? How were the categories of risk established, and by whom? What problems does this pose? We started to map this out concentrating on what data was coming in and when, and where decisions were being made.

As we mapped out what this algorithm would look like we began to see problems at different points. From the way data is originally categorised, for example high, medium, or low risk, all the way to how this would influence or impact the human decision-making process. Whether or not to knock on the door of a family home.

Our next task was to consider this across an individual, institutional, and structural level. We talked about the consequences of a false negative, and even in the first few seconds of this point coming up we were confronted with negative societal, personal and family impacts. But this was only one point in which we could foresee issues.

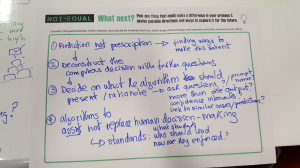

As this was only one afternoon, it was time to move on and to think about a response to the first set of issues we managed to identify in our given context. Moving onto the response stage of the workshop we decided what our initial ideas were in response to these issues. This was the start of considering the relationship between the machine and the human.

In particular, we talked about the role of the machine, stating the need to be clear about the predictive nature and avoiding the risks of letting the machine be prescriptive.

In other words, being clear that algorithms should assist, and the level at which they assist is important.

Finally, we discussed how the algorithmic output was important.

Rather than providing a percentage, or rating, or level of risk, we concluded (for now) that the output should be more descriptive. This could be for example, indicating confidence level in its own prediction, showing the exact factors it used to calculate, and even prompting the human actor to look into similar cases and to question the results presented to them.

Finally, we considered what these ideas might look like as a set of standards or design principles.

These early discussions will allow attendees to stay in touch through the Network and continue the conversation, along with the other workshop groups and the wider Network partners and members.

Hopefully we can pick up some of these discussion points and ideas at the next Network event at the Urban Sciences Building in Newcastle at the end of November.